In 2024 alone, the global market for artificial intelligence (AI) governance reached $227.6 million, and it is projected to hit $1.4 billion by the end of the decade.[*] Organizations are clearly changing how they approach AI deployment. AI systems now make critical decisions in healthcare, employment, credit, and other high-stakes domains, and oversight has never been more urgent.

Some 42% of AI adopters report that they prioritized performance and speed over fairness, deploying biased and flawed systems in finance, hiring, and healthcare.[*] And while 73% of C-suite executives say ethical AI frameworks are imperative, only 6% have walked the walk and developed them.[*] This guide explains what AI governance is, why it matters, what its components are, and how to implement it.

- What is AI Governance?

- Why it's Important

- Key Components

- Core Principles

- Challenges

- Global Regulations

- Levels of Governance

- Implementation Steps

- Best Practices

- Conclusion

What is AI Governance?

AI governance is the systematic approach your organization takes to ensure that AI systems operate ethically and comply with applicable laws and regulations. It establishes the standards, processes, and guardrails that AI needs throughout its lifecycle, from initial development through deployment and ongoing monitoring.

Its purpose goes beyond legal compliance. Governance assures that your AI systems respect fundamental human rights, work fairly across user groups regardless of demographics, and produce outcomes that align with both societal and organizational values. At its core, AI governance is about accountability: it defines roles and responsibilities for AI systems and the people behind them, and it establishes decision-making protocols that address AI risks before they become real-world consequences.

Why is AI Governance Important?

AI governance has moved from a theoretical concern to a business imperative as organizations face rising pressure from customers, stakeholders, and regulators to show that their AI systems run responsibly. In 2024, US federal agencies issued 59 AI-related regulations, up from 25 in 2023.[*] This rapid rise in regulatory oversight is something companies cannot afford to ignore.

Beyond legal requirements, AI governance also serves business needs. Consumer confidence that AI companies will protect personal information dropped from 50% to 47% in a single year.[*] Survey respondents cited these factors behind AI governance's rise:

- A need for transparency and accountability in decision-making processes

- Doubts about whether algorithmic bias and discriminatory outcomes are being prevented

- The desire to avoid financial penalties, legal liabilities, and reputational damage caused by faulty AI

- Building stakeholder trust, where the top challenges cited were security concerns, workforce impact, and ethical implications[*]

- Compliance with evolving laws and regulations across major industries

Key Components

A comprehensive approach to AI governance recognizes that several elements interlock to form a framework that tackles risk effectively. These are the common building blocks of an effective strategy:

Ethical Guidelines

Ethical frameworks serve as the north star for your governance program: they are the moral principles that guide AI development and deployment. In one survey, 54% of leaders said cognitive technologies such as LLMs pose the most severe ethical risks among emerging technologies.[*]

Many respondents named data privacy, transparency, and data provenance as their top concerns for generative AI (GenAI). Ethical guidelines define acceptable use cases, prohibited actions, and the values that should inform AI decision-making.

Regulatory Frameworks

Legal compliance structures ensure organizations meet requirements across jurisdictions. Legislative mentions of AI increased by 21.3% across 75 countries globally in 2024. Organizations must map their obligations and implement controls to demonstrate adherence to applicable laws.[*]

Accountability Mechanisms

Clear ownership and responsibility structures define who makes decisions about AI systems. They also specify who bears responsibility when outcomes do not align with corporate or societal values.

The good news is that a sweeping majority of business leaders (93%) believe humans must be involved in AI decision-making.[*] This points to the need for human oversight mechanisms that give people meaningful control over automated systems and over how those systems are used.

Risk Management

Risk management covers the systematic approaches to identifying, assessing, and mitigating AI risks throughout the system lifecycle. These mechanisms let organizations address potential harms before they turn into disasters. Organizations must also monitor their AI systems continuously to detect emerging risks as models evolve and deployment contexts change, which helps maintain good faith with stakeholders and users.
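One common way to make "identify and assess" concrete is a risk register that scores each risk by likelihood and impact. The Python sketch below assumes 1-to-5 scales and illustrative score bands; the `AIRisk` class, the helper functions, and the example systems are all hypothetical, not a standard method.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    system: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain), illustrative scale
    impact: int      # 1 (negligible) to 5 (severe), illustrative scale

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self) -> int:
        # Likelihood x impact, the classic risk-matrix product (range 1-25).
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a score to a band; these thresholds are assumptions to tune."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks: list[AIRisk]) -> list[tuple[str, int, str]]:
    """Return (system, score, rating) tuples, highest score first."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.system, r.score, rating(r.score)) for r in ranked]


register = [
    AIRisk("resume-screener", "Unequal outcomes across demographic groups", 4, 5),
    AIRisk("support-chatbot", "Personal data exposed in responses", 2, 4),
    AIRisk("demand-forecast", "Accuracy degrades as conditions shift", 3, 2),
]
for system, score, band in prioritize(register):
    print(f"{system}: {score} ({band})")
```

Sorting by score gives the team an ordered list of where mitigation effort should go first; re-scoring the register on a schedule is one way to reflect the changing deployment contexts mentioned above.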

Data Governance

Controls over data quality and usage act as guardrails, ensuring that AI systems train only on datasets deemed appropriate and fair. A whopping 77% of business leaders are concerned about both the timeliness and the reliability of their underlying data.[*] That concern underscores AI governance's role in establishing trust in AI systems overall.
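Timeliness and reliability can be checked automatically before data reaches a training pipeline. The sketch below is a minimal illustration, assuming each record is a dict with a hypothetical `updated_at` timestamp; the function names and the 95% completeness and 90% freshness thresholds are placeholders, not industry standards.

```python
from datetime import datetime, timedelta, timezone


def quality_report(records, required_fields, max_age, now):
    """Share of records that are complete, and share that are fresh enough.

    Assumes each record is a dict with a hypothetical 'updated_at' datetime.
    """
    if not records:
        raise ValueError("no records to assess")
    complete = sum(
        all(r.get(f) is not None for f in required_fields) for r in records
    )
    fresh = sum(
        r.get("updated_at") is not None and now - r["updated_at"] <= max_age
        for r in records
    )
    return {"completeness": complete / len(records), "freshness": fresh / len(records)}


def passes_gate(report, min_completeness=0.95, min_freshness=0.90):
    """Illustrative thresholds; set them per dataset and use case."""
    return (report["completeness"] >= min_completeness
            and report["freshness"] >= min_freshness)


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
records = [
    {"age": 34, "income": 52_000, "updated_at": now - timedelta(days=2)},
    {"age": 41, "income": None, "updated_at": now - timedelta(days=5)},
    {"age": 29, "income": 61_000, "updated_at": now - timedelta(days=400)},
    {"age": 57, "income": 48_000, "updated_at": now - timedelta(days=10)},
]
report = quality_report(records, ["age", "income"], timedelta(days=90), now)
print(report, passes_gate(report))
```

Here one record is incomplete and one is stale, so both shares come out at 0.75 and the dataset fails the gate; a real pipeline would block training and notify the data owner.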

Transparency and Explainability

Any organization that uses AI must be able to explain and justify how its AI systems reach decisions. Getting there means documenting training data, model architectures, and decision logic in plain language that any stakeholder can read and understand.

Core Principles

The following principles should shepherd your responsible AI development and deployment efforts as you build out AI governance.

Fairness

AI systems need to treat all individuals and groups equitably and ethically, but today they often fall short. In one study, AI resume-screening systems reportedly favored white-associated names nearly 85% of the time and Black-associated names just 9% of the time.[*] Eliminating biases like these is essential to making your AI fair.
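One common way to quantify disparities like this in a screening process is to compare selection rates across groups. The sketch below applies the four-fifths rule of thumb from US employment-selection guidance: a group whose selection rate falls below 80% of the highest group's rate is flagged for review. The group names and counts are made up, and a ratio check is a screening heuristic, not a complete fairness audit.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group -> (number selected, number of applicants)."""
    rates = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / applicants
    return rates


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {g: 1.0 for g in rates}  # nobody selected: no disparity to measure
    return {g: r / best for g, r in rates.items()}


def flagged_groups(outcomes, threshold: float = 0.8) -> list[str]:
    """Groups whose ratio falls below the four-fifths (0.8) rule of thumb."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical screening results: (advanced to interview, total applicants).
results = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
print(impact_ratios(results))
print(flagged_groups(results))  # ['group_b']
```

Group B's rate (0.30) is only about 60% of group A's (0.50), so it is flagged; group C's ratio of about 0.9 passes.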

Accountability

AI can tempt people to believe that the humans behind the algorithms are exempt from responsibility. That belief is wrong. Clear lines of responsibility must ensure that humans remain accountable for AI system outcomes. Organizations must establish who authorizes deployments, who monitors performance, and who steps in when systems cause harm.

Transparency

Every relevant stakeholder needs to be able to see how AI systems operate, both in detail and at a high level. This means disclosing when AI systems are used, what data they draw on, and how people can challenge automated determinations.

Privacy

An AI system's need to complete its task cannot override the protection of personal information or an individual's right to privacy. Some 57% of consumers say AI poses a significant threat to their privacy, which makes privacy protections essential to maintaining user trust.[*]

Security

Some 84% of organizations say cybersecurity risks are a top concern and a deterrent to AI adoption.[*] That level of concern underscores the need for a robust cybersecurity strategy that prevents unauthorized access to, manipulation of, or use of AI systems and the data fed into them.

Empathy

Most importantly, human dignity and human impact must be weighed at every level of an AI system's design. The goal is technology that serves human needs; if your technology forces people to compromise because of its own limitations, it has failed.

Challenges

Organizations face a sheer volume of obstacles that make AI governance hard to implement, hard to make effective, and easy to treat as an afterthought. Here are some of the many challenges organizations worldwide face:

Complex Technology Understanding

AI systems, especially those built on large language models, work in ways that mystify even their own creators. Some 51% of organizations using AI admit they lack the right mix of in-house talent with enough AI expertise to bring their strategies to life.[*] These knowledge gaps undermine efforts at proper AI governance.

Lack of Accountability

Without a clear ownership structure, it is hard to assign responsibility for AI outcomes, and unowned outcomes can lead to unfair and often dangerous situations. Organizations need a way to assign accountability when multiple teams contribute their skill sets to make an AI system work, and this is even harder when proprietary models are involved. Each team needs to own the parts it worked on.

Bias in Data and Algorithms

The vast majority of LLMs are trained on datasets scraped from the open web, where women are underrepresented in about 41% of professional contexts and minorities appear 35% less often.[*] These biases become embedded in AI systems and can compound over time.

Balancing Innovation with Regulation

Corporate FOMO (fear of missing out) has turned AI deployment into a horse race. Some 32% of business leaders say they rushed AI adoption, and 58% say they failed to build AI ethics training into their processes.[*] Rushing may feel like a step forward, but as regulations tighten, shortcuts taken now can cost many steps backward.

Lack of Standardization

Divergent requirements across sectors and regions create compliance complexity: a hodgepodge of moving targets. Satisfying regulators in one region does not mean you satisfy them everywhere; you must meet your obligations in every region where you deploy AI.

Rapid Evolution

AI capabilities are evolving faster than governance frameworks can anticipate, and governance spending is racing to keep up: by the end of the decade, AI governance software spending is projected to reach nearly $16 billion, about 7% of overall AI spending.[*] The pace is rapid, not glacial.

Collaboration Constraints

To govern AI effectively, you need alignment across your organization and beyond: business units, legal departments, technical teams, and external stakeholders all have to be on the same page. The top challenges in building AI ethics processes are skill gaps (49%), executive buy-in (41%), and leadership (40%).[*]

Global Regulations

AI regulation is not a purely domestic matter; countries worldwide are scrambling to govern AI systems. Organizations must understand the requirements in every jurisdiction where they operate.

EU AI Act

The European Union's framework takes a risk-based approach to AI regulation. Non-compliance with the rules on prohibited AI practices can result in fines of up to 35 million EUR or 7% of a company's worldwide annual turnover, whichever is higher.[*]

Other violations can incur fines of up to 15 million EUR or 3% of worldwide annual turnover, and supplying incorrect information to authorities can result in fines of up to 7.5 million EUR or 1% of turnover.
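Because each ceiling is "a fixed amount or a share of turnover, whichever is higher," the exposure for a large company is driven by turnover. The sketch below computes only the upper bound under that rule, applying it to all three tiers listed above; the tier keys are hypothetical labels, and it deliberately ignores the Act's separate treatment of SMEs (where the lower amount applies) and every factor regulators weigh when setting an actual fine.

```python
# Fine ceilings as summarized above: (fixed cap in EUR, percent of worldwide annual turnover).
FINE_CEILINGS = {
    "prohibited_practice": (35_000_000, 7),
    "other_violation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, annual_turnover_eur: int) -> float:
    """Upper bound of a fine under the 'whichever is higher' rule.

    Simplification: ignores the SME rule and all case-specific factors.
    """
    fixed_cap, percent = FINE_CEILINGS[tier]
    # Integer percentages keep the multiplication step free of float rounding.
    return max(fixed_cap, annual_turnover_eur * percent / 100)


print(max_fine_eur("prohibited_practice", 2_000_000_000))  # 140000000.0
print(max_fine_eur("prohibited_practice", 100_000_000))    # 35000000
```

For a company with 2 billion EUR in turnover, 7% (140 million EUR) exceeds the 35 million EUR floor; for a 100 million EUR company, the fixed amount is the higher of the two.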

US SR 11-7

Banking regulators in the United States issued guidance requiring financial institutions to establish model risk management frameworks.[*] These requirements apply to AI systems used in lending, risk assessment, and other banking functions.

Canada's Directive on Automated Decision-Making

Canadian federal institutions are required to complete algorithmic impact assessments and keep stakeholders informed before deploying automated decision systems. The directive also requires transparency, accountability, and mechanisms for human intervention.

China's Interim Measures

Chinese regulations require security assessments before AI systems launch publicly. Regulators emphasize oversight of generative AI systems and mandate that providers implement mechanisms to prevent the generation of illegal content and protect user data privacy.

Singapore's Model AI Governance Framework

Singapore, an early mover in AI governance, released its Model AI Governance Framework in 2019 and updated it in May 2024.[*] The framework is built on stakeholder interaction, operations management, and ensuring appropriate levels of human involvement in AI-assisted decisions.

NIST AI RMF

The National Institute of Standards and Technology released its voluntary AI Risk Management Framework in January 2023. The framework provides guidance on identifying, assessing, and mitigating AI risks while promoting innovation.

OECD Principles

The OECD Principles on Artificial Intelligence were adopted by 42 countries in May 2019.[*] All OECD members, along with partner countries in the Arab region, Africa, and South America, now promote the OECD AI Principles, which set international standards for trustworthy AI that respects human rights and democratic values.

Levels of Governance

Organizations adopt AI governance at different maturity levels depending on their needs, resources, and regulatory requirements. Understanding these levels helps leaders assess their current state and stake out plans for further improvements.

Informal

Informal governance is values-based, with no formal policies or enforcement mechanisms. Organizations rely on individual judgment and professional norms rather than documented standards.

This type of governance can fall victim to groupthink if members of the organization are not diverse and do not challenge norms.

Ad-Hoc

At the ad-hoc level, organizations develop policies in response to emerging challenges or incidents, so governance tends to be defensive rather than proactive. It emerges reactively rather than through systematic planning.

Unfortunately, this usually leaves gaps and inconsistencies across the organization and acts more like a band-aid than a real solution.

Formal

A formal approach means building comprehensive frameworks with documented policies, defined roles, risk assessment processes, and monitoring mechanisms. Organizations are increasingly seeking robust, scalable AI governance platforms rather than legacy ad-hoc compliance tools, in support of a long-term vision and strategy.

AI Governance Implementation Steps

Building effective AI governance takes a cross-functional team effort to ensure AI works as intended. It is a methodical process that requires long-term planning. Below is an outline of the steps you can take:

Step 1: Conduct AI Audits

Inventory all of your active AI systems. Understand each system's risk profile and identify any gaps in current governance practices that need to be addressed. This baseline assessment shows where governance investments will have the greatest impact.
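An audit often starts as a simple structured inventory that can be queried for gaps. The sketch below assumes a hypothetical record shape (`AISystem` with an owner, an illustrative risk tier, a documentation flag, and a last-review date) and three example gap rules; the field names, tier labels, and one-year review window are assumptions, not requirements from any framework.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AISystem:
    """One row in a hypothetical AI system inventory."""
    name: str
    owner: Optional[str]            # accountable person or team
    risk_tier: str                  # illustrative labels: "high", "limited", "minimal"
    documented: bool                # e.g. model card or data sheet on file
    last_reviewed: Optional[date]


def find_gaps(inventory: list[AISystem], today: date,
              max_review_age_days: int = 365) -> list[tuple[str, str]]:
    """Return (system, gap) pairs that the governance team should address."""
    gaps = []
    for system in inventory:
        if not system.owner:
            gaps.append((system.name, "no accountable owner"))
        if system.risk_tier == "high" and not system.documented:
            gaps.append((system.name, "high-risk system lacks documentation"))
        if (system.last_reviewed is None
                or (today - system.last_reviewed).days > max_review_age_days):
            gaps.append((system.name, "review overdue"))
    return gaps


inventory = [
    AISystem("resume-screener", None, "high", False, date(2023, 11, 1)),
    AISystem("support-chatbot", "cx-platform-team", "limited", True, date(2024, 12, 15)),
]
for name, gap in find_gaps(inventory, today=date(2025, 3, 1)):
    print(f"{name}: {gap}")
```

In this example every gap belongs to the high-risk system, which is exactly the kind of signal that tells you where governance investment should go first.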

Step 2: Develop an Ethics Framework

The goal of this step is to establish principles that reflect your organization's values and your stakeholders' expectations. You need to know exactly what your AI can and will do, and where its ethical boundaries lie.

This gap is widespread: only 35% of companies currently have an AI governance framework in place, so there is much work to be done across industries to regulate AI and ensure user safety.[*]

Step 3: Train Your Employees

Is your organization truly AI literate? If not, make sure everyone is aware and ready. A sweeping majority of employers (86%) expect AI to drive business transformation within the next five years, yet they have not yet committed to training their staff.[*]

Training needs to cover not only technical concepts but also ethical considerations and practical governance responsibilities, so that accountability extends across the organization.

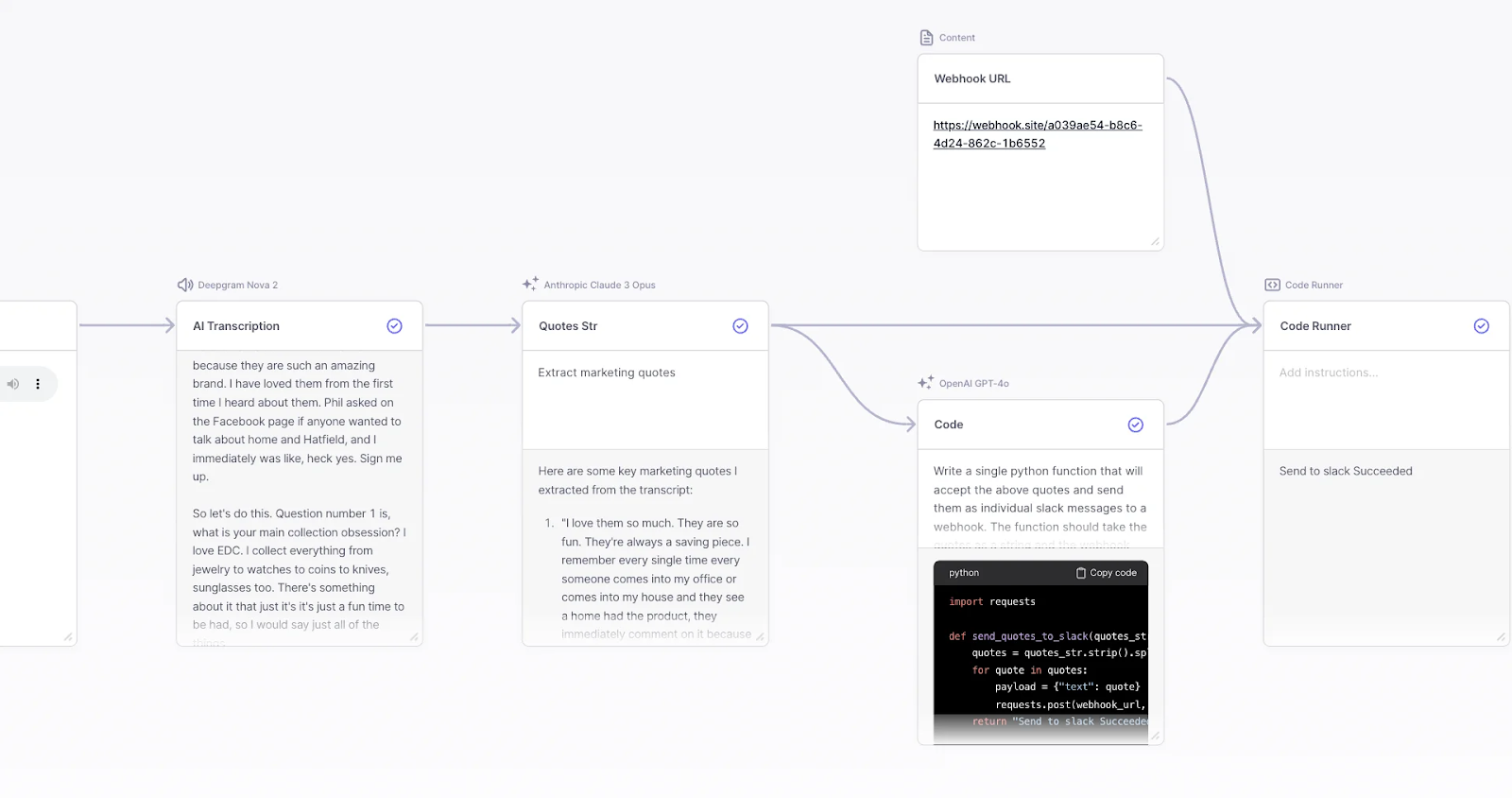

Step 4: Implement Monitoring Mechanisms

At this stage, you deploy tools and processes to track AI system performance. Take special care to detect anomalies and identify potential harm to stakeholders, users, and others.

Continuous monitoring is what enables a rapid response when catastrophic failures, such as data breaches, occur.
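A basic building block for this kind of monitoring is a rolling-window check that raises an alert when a metric's recent average drops below a floor. The sketch below is one hypothetical way to do it; the `RollingMonitor` class, the three-day window, the 0.90 accuracy floor, and the readings are all illustrative assumptions.

```python
from collections import deque


class RollingMonitor:
    """Tracks a metric over a sliding window and flags sustained drops."""

    def __init__(self, window: int, floor: float):
        if window < 1:
            raise ValueError("window must be at least 1")
        self.values = deque(maxlen=window)  # oldest readings fall off automatically
        self.floor = floor

    def observe(self, value: float) -> bool:
        """Record one measurement; return True if an alert should fire."""
        self.values.append(value)
        if len(self.values) < self.values.maxlen:
            return False  # wait for a full window to avoid noisy early alerts
        return sum(self.values) / len(self.values) < self.floor


# Hypothetical daily accuracy readings for a deployed model.
monitor = RollingMonitor(window=3, floor=0.90)
for day, accuracy in enumerate([0.95, 0.94, 0.93, 0.80, 0.78], start=1):
    if monitor.observe(accuracy):
        print(f"day {day}: rolling accuracy below 0.90, trigger response protocol")
```

Averaging over a window trades a little detection speed for fewer false alarms from single noisy readings; the alert itself would feed whatever rapid-response process your organization defines.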

Step 5: Stay Informed and Aware

Do not fall asleep at the wheel or ever assume AI is a “set it and forget it” platform. You must do your due diligence in tracking regulatory developments, best practices, and emerging risks. 80.4% of U.S. local policymakers now support stricter data privacy rules, indicating that regulatory requirements will continue evolving.[*]

Best Practices for AI Governance

Leading organizations are aware of, and ready to adopt, practices that strengthen their AI governance. The following approaches help translate fundamental goals into practice and ensure you do your part for AI safety.

Cross-Functional Collaboration

Do not allow silos between technical teams, business units, legal departments, and compliance functions; these teams need to work in tandem to prevent AI failures. Effective AI governance requires coordinated effort across organizational boundaries, where responsibility is a shared measure of team success rather than a game of blame tag.

Transparent Communication

Disclose all AI use to stakeholders so they know which processes involve AI judgment. Explain how your systems reach decisions, to maintain transparency and fairness, and provide channels for feedback.

Transparency is the cornerstone of trust, and it enables stakeholders to hold organizations accountable when they act irresponsibly.

Regulatory Sandboxes

Engage with regulators through sandbox programs, which allow controlled testing of AI systems before deployment. These environments provide valuable feedback that can catch potential flaws and misuse early. At the same time, you demonstrate a commitment to compliance and a proactive approach to safe AI use.

Continuous Monitoring

If you are not actively tracking system performance, detecting drift, and identifying emerging risks, you are failing. Organizations must commit to ongoing observation that captures both technical metrics and the real-world impact of AI on relevant populations and demographic groups.
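Capturing impact on different populations can start with something as simple as breaking a performance metric down by group. The sketch below computes accuracy per group from hypothetical post-deployment outcomes and reports the gap between the best- and worst-served groups; the group labels, the data, and the 0.05 tolerance are illustrative assumptions.

```python
from collections import defaultdict


def accuracy_by_group(rows):
    """rows: iterable of (group, prediction, actual) tuples."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, prediction, actual in rows:
        totals[group] += 1
        hits[group] += int(prediction == actual)
    return {group: hits[group] / totals[group] for group in totals}


def accuracy_gap(rows) -> float:
    """Difference between the best- and worst-served groups' accuracy."""
    scores = accuracy_by_group(rows)
    if not scores:
        return 0.0
    return max(scores.values()) - min(scores.values())


# Hypothetical labeled outcomes collected after deployment.
rows = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 0, 1),
]
print(accuracy_by_group(rows))  # {'group_a': 0.75, 'group_b': 0.5}
gap = accuracy_gap(rows)
if gap > 0.05:  # illustrative tolerance
    print(f"accuracy gap of {gap:.2f} across groups needs review")
```

An aggregate accuracy figure can look healthy while one group is served much worse; tracking the gap alongside the overall metric keeps that visible.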

Automated Detection

To strengthen your AI governance, consider tools that automatically detect bias, model drift, and performance degradation. The need is growing: 233 AI-related incidents were documented in 2024, a 56% increase over 2023.[*]
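Drift detection tools typically compare the distribution of a model's inputs or scores in production against a reference sample. The sketch below uses the Population Stability Index (PSI), one widely used drift statistic, with equal-width bins and the commonly cited 0.1 and 0.25 rule-of-thumb bands; the function names, bin count, and sample data are assumptions, and the bands should be calibrated for your own models.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between a reference and a current sample.

    Bins are equal-width over the reference range; values outside it are
    clamped into the edge bins. eps keeps empty bins from producing log(0).
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back when every reference value is equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            index = min(max(int((v - lo) / width), 0), bins - 1)
            counts[index] += 1
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(reference), proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_level(score):
    """Commonly cited rule-of-thumb bands; calibrate for your own models."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


reference = [i / 100 for i in range(100)]        # scores seen at validation time
current = [0.5 + i / 200 for i in range(100)]    # scores seen in production
print(drift_level(psi(reference, reference)))    # stable
print(drift_level(psi(reference, current)))      # significant drift
```

In the example, every production score lands in the upper half of the reference range, so half the bins empty out and PSI rises far above 0.25, which a governance dashboard could surface as a drift alert.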

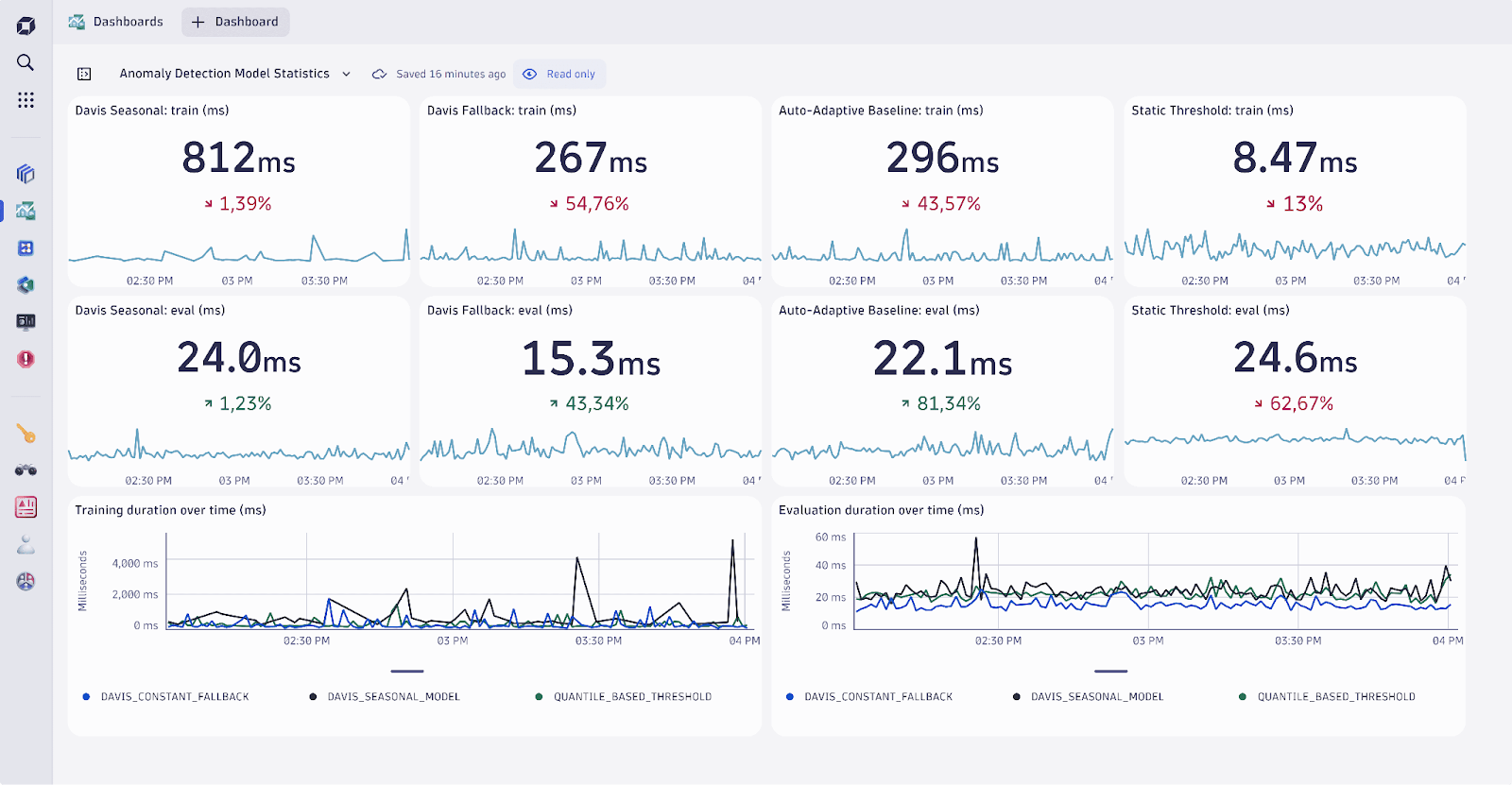

Visual Dashboards

Dynatrace Visual Dashboard

Create interfaces that make AI system behavior visible and understandable to non-technical stakeholders. Dashboards should present key metrics, risk indicators, and compliance status in accessible formats.

Audit Trails

Maintain comprehensive records that document your AI systems from development onward. You must be able to justify deployment decisions and demonstrate operational performance. Audit trails enable retrospective analysis when you need to investigate incidents, and they demonstrate compliance to regulators.
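One way to make an audit trail tamper-evident is to chain entries by hash, so that editing any past record invalidates every hash after it. The sketch below is a minimal in-memory illustration using Python's standard `hashlib` and `json`; the `AuditTrail` class, the field names, and the example actors are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only, hash-chained log: editing any past entry breaks the chain."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, actor: str, action: str, details: dict) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record("j.doe", "approve_deployment", {"model": "resume-screener", "version": "2.1"})
trail.record("monitoring-bot", "alert", {"metric": "accuracy", "value": 0.87})
print(trail.verify())  # True
trail.entries[0]["details"]["version"] = "2.2"  # simulate tampering
print(trail.verify())  # False
```

A hash chain only proves integrity relative to a trusted copy of the latest hash; storing that hash somewhere separate (or with an external party) is what stops someone from quietly rewriting the whole log.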

Be a Responsible Leader with AI Governance

AI governance reflects your organization’s commitment to deploying ethical AI systems. Regulatory scrutiny is just starting to kick into gear, and public trust in AI is waning. As business leaders, it is now your responsibility to comply with emerging AI governance frameworks from government regulators and to uphold internal organizational standards.

Get ahead of the game and commit to ethical principles. That means investing in cross-functional governance models that make governance a team effort, and demonstrating your dedication to accountability and transparency at every level. If you act decisively today, you will earn trust, secure a competitive advantage, and help shape the responsible evolution of AI.